Bend It Like NASA (part 2)

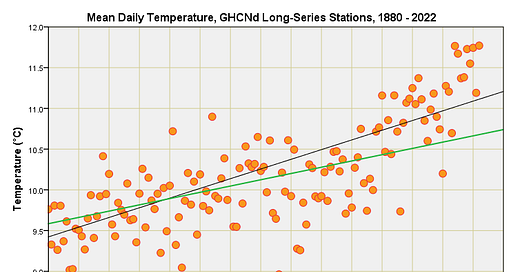

A quick look beneath the sleek exterior of super-smooth global gridded temperature anomalies reveals a pretty sorry bunch of data

I left part 1 of this mini-series on a bit of a cliffhanger, promising something fancy to determine whether we are looking at a smoking gun: increasing rates of missing winter temperature records over time at 21 super-long series GHCNd stations, which would serve to generate fake warming. Herewith that something fancy…

Something Fancy

Between Jan 1880 and Jul 2023 there are 1,723 monthly records in the pot, and I have derived the total dodgy day count for each month. I have boiled the months down into a 4-level factor representing the seasons (Dec-Feb, Mar-May, Jun-Aug, Sep-Nov), and the 144 years down into a 5-level factor representing key collection periods: 1880-1934 (pre-war); 1935-1949 (WWII); 1950-1989 (post-war); 1990-1999 (early IPCC); 2000-2023 (hyper hysteria).
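For anyone wanting to follow along at home, here is a minimal sketch of how the two factors could be built, using nothing but the Python standard library. It is not the code behind this analysis. The function names (`season`, `period`, `monthly_records`) are made up for illustration, and it assumes each December is filed under its own calendar year rather than being attached to the following winter, which the text above does not spell out.

```python
from collections import Counter

# Collection periods exactly as listed in the text: (first year, last year, label).
PERIODS = [
    (1880, 1934, "pre-war"),
    (1935, 1949, "WWII"),
    (1950, 1989, "post-war"),
    (1990, 1999, "early IPCC"),
    (2000, 2023, "hyper hysteria"),
]


def season(month: int) -> str:
    """Map a calendar month (1-12) to one of the four seasonal levels."""
    if month in (12, 1, 2):
        return "Dec-Feb"
    if month in (3, 4, 5):
        return "Mar-May"
    if month in (6, 7, 8):
        return "Jun-Aug"
    return "Sep-Nov"


def period(year: int) -> str:
    """Map a year to one of the five collection-period levels."""
    for start, end, label in PERIODS:
        if start <= year <= end:
            return label
    raise ValueError(f"year {year} falls outside 1880-2023")


def monthly_records(first=(1880, 1), last=(2023, 7)):
    """Yield every (year, month) pair from Jan 1880 to Jul 2023 inclusive."""
    year, month = first
    while (year, month) <= last:
        yield year, month
        month += 1
        if month > 12:
            year, month = year + 1, 1


# One row per monthly record, tagged with both factor levels.
# Assumption: December stays in its own calendar year.
records = [(y, m, season(m), period(y)) for y, m in monthly_records()]

# Number of monthly records in each season-by-period cell.
cell_counts = Counter((s, p) for _, _, s, p in records)

print(len(records))      # 1723 monthly records
print(len(cell_counts))  # 20 season-by-period cells
```

The record count matches the 1,723 quoted above, and the 4 x 5 layout gives 20 cells. The cells are far from equal in size (the pre-war period alone spans 55 years), which is worth keeping in mind when comparing raw totals across periods.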

What I can do with this lot is run a generalised linear model (GLM) using a Tweedie distribution to determine if the dodgy day count varies significantly across these two factors (main effects) as well as their…